AI News Feed

Google’s Nano Banana Pro mimics phone photos

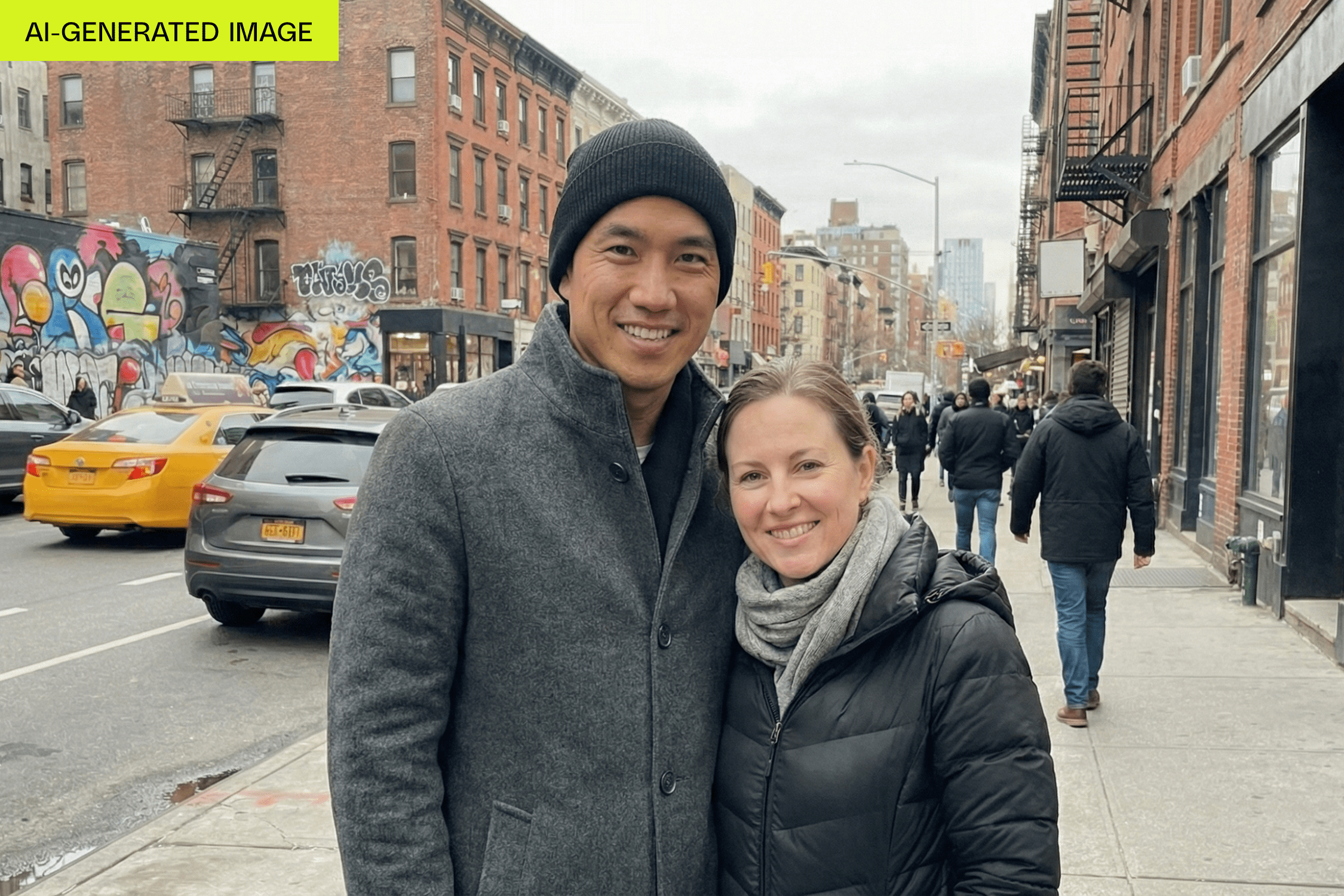

05 Dec 2025: Google’s Nano Banana Pro generates AI images that mimic smartphone imperfections and add subtle authenticity cues (watermarks, props, textures), making fake photos harder to detect.

Google’s Nano Banana Pro is producing AI images that look strikingly like phone photos — not because they’re hyper‑polished, but because they mimic the small imperfections and processing choices of smartphone cameras. Allison Johnson reports that Nano Banana Pro renders bright, flat exposures, heavy sharpening, modest depth of field, and sensor-like texture (noise) that make images feel authentic while hiding typical “AI tells.” Ben Sandofsky of Halide notes the model’s “aggressive image sharpening” and realistic texture that help images “pop.”

Google says Nano Banana Pro doesn’t use Google Photos. Elijah Lawal (Gemini communications) says the model can connect to Google Search (text-only) to ground outputs, which may let it add contextually appropriate details automatically. Johnson found examples where the model inserted period‑correct clothing, cars, and even a Northwest Multiple Listing Service watermark when asked to create a fake Zillow listing. DeepMind product manager Naina Raisinghani called that detail a hallucination, though the watermark looks intentional.

The concern: Nano Banana Pro is getting better at adding subtle authenticity cues (watermarks, logos, chyrons, plausible props), making AI images far harder to detect. Johnson suggests the moment when you can’t trust an unfamiliar photo may already be here, and warns readers to “tune your AI radar.”

Source

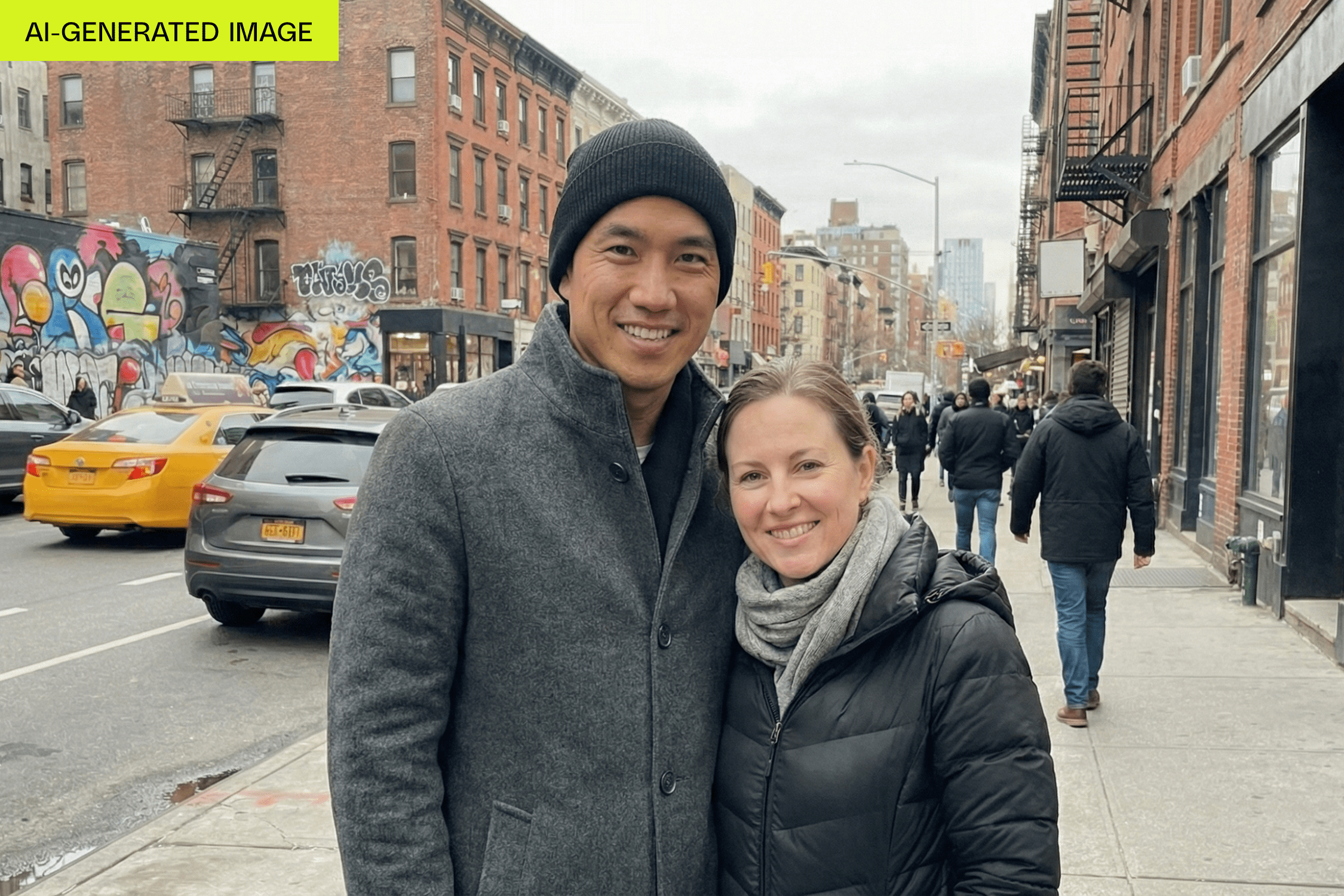
