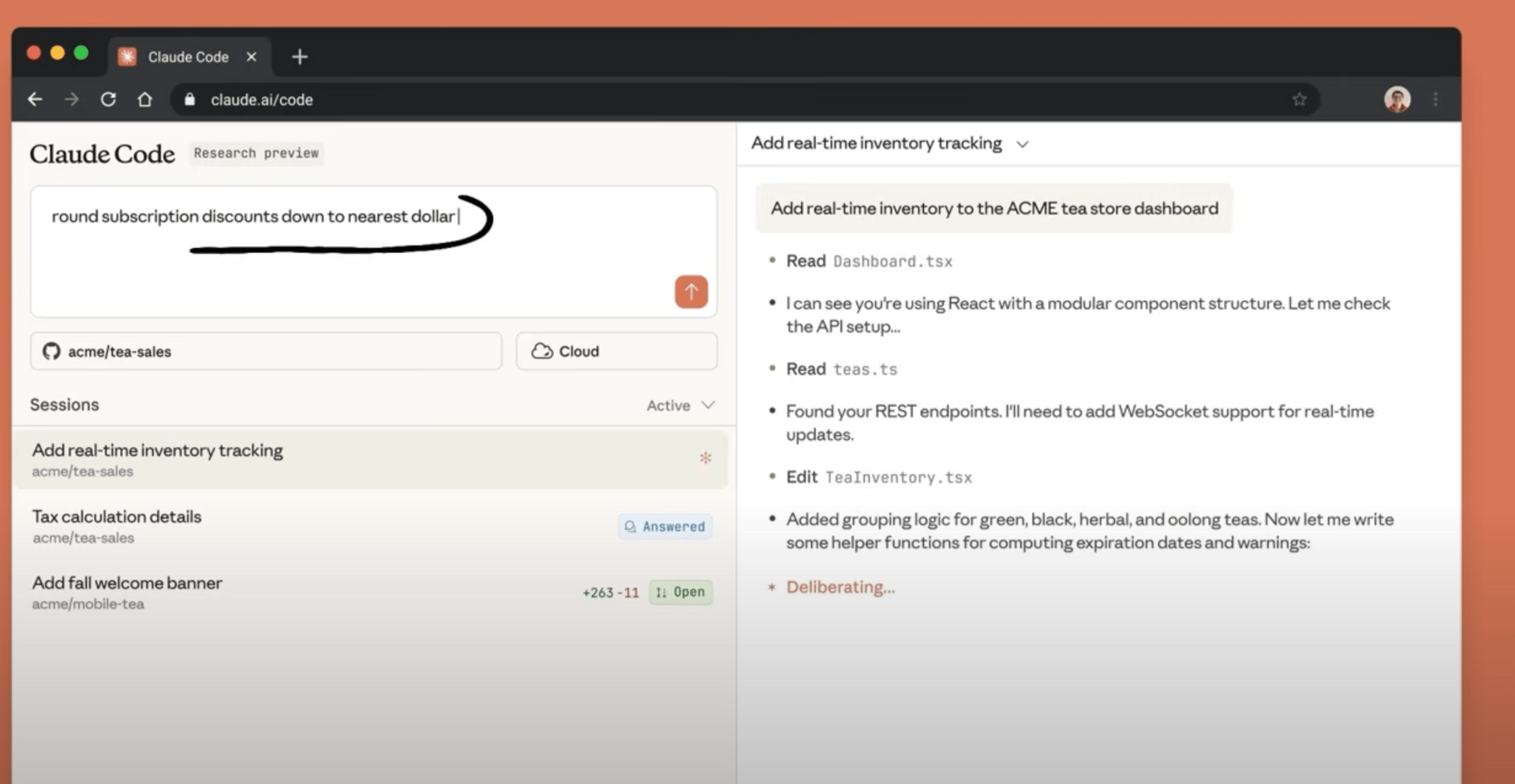

Claude Code's sandbox reduces permission prompts

22 Oct 2025: Anthropic launched Claude Code on the web with a sandbox that isolates filesystem and network access, cuts permission prompts by 84%, proxies git credentials, and runs sessions in isolated cloud environments. The sandbox itself is open‑sourced.

Anthropic released Claude Code on the web with a new sandboxing model intended to reduce permission prompts and harden security for AI-driven coding tasks. Instead of asking the user to approve every filesystem or network action, Claude Code defines bounded sessions (a fenced “playground”) where the model can operate freely inside allowed directories and approved domains.

The sandbox enforces filesystem isolation (access is limited to the project directory, and sensitive files such as SSH keys are blocked), network isolation (connections are limited to approved domains, enforced at the OS level), and real‑time alerts when the agent tries to leave its boundary. Anthropic reports that in internal tests the approach cut permission prompts by 84%. That addresses “approval fatigue”, where users faced with constant prompts either approve requests without reading them or skip permission checks altogether.
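The two boundary checks described above can be sketched roughly as follows. This is an illustration only, not Anthropic's implementation: the `SandboxPolicy` class, its field names, and the list of blocked names are all hypothetical, and the real sandbox enforces these limits at the OS level rather than in application code.

```python
import posixpath
from dataclasses import dataclass


@dataclass(frozen=True)
class SandboxPolicy:
    """Hypothetical policy object; every name here is illustrative."""
    project_root: str
    allowed_domains: frozenset
    # Assumed examples of sensitive names; the real blocklist is not stated.
    blocked_names: frozenset = frozenset({".ssh", ".aws", ".env"})

    def allows_path(self, path: str) -> bool:
        # Lexically normalise the path so "../" cannot escape the root.
        # (A real sandbox must also handle symlinks; this sketch does not.)
        root = posixpath.normpath(self.project_root)
        candidate = posixpath.normpath(posixpath.join(root, path))
        inside = candidate == root or candidate.startswith(root + "/")
        if not inside:
            return False
        # Reject sensitive files even when they sit inside the project.
        return not any(part in self.blocked_names
                       for part in candidate.split("/"))

    def allows_host(self, host: str) -> bool:
        # Allow an approved domain or any of its subdomains, nothing else.
        host = host.lower().rstrip(".")
        return any(host == d or host.endswith("." + d)
                   for d in self.allowed_domains)


policy = SandboxPolicy(
    project_root="/work/repo",
    allowed_domains=frozenset({"github.com", "pypi.org"}),
)

for target in ("src/main.py", "../../etc/passwd", "/home/user/.ssh/id_rsa"):
    print(target, policy.allows_path(target))

for host in ("api.github.com", "github.com.evil.io"):
    print(host, policy.allows_host(host))
```

Anything a check like this rejects is where a sandbox would raise an alert and fall back to asking the user, which is how prompts end up limited to actions outside the boundary.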

Claude Code sessions run in isolated cloud environments so you can run parallel fixes, work on remote repos, or code from mobile devices. Git credentials never enter the sandbox. Instead, a proxy verifies git operations, so compromised code inside the sandbox should not be able to expose your real credentials. Anthropic has open‑sourced the sandbox code and provides links to technical docs and Claude Skills for building similar agent workflows.
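A minimal sketch of the credential-proxy idea, under stated assumptions: the article says only that a proxy verifies git operations and that real credentials stay outside the sandbox. The scoped session token, the per-session repo and branch restriction, and the `GitProxy` and `Session` names below are hypothetical details added for illustration.

```python
import hmac
from dataclasses import dataclass


@dataclass(frozen=True)
class Session:
    scoped_token: str  # the only secret the sandbox ever holds (assumed)
    repo: str          # repository this session may touch (assumed)
    branch: str        # branch this session may push to (assumed)


class GitProxy:
    """Hypothetical proxy that runs outside the sandbox."""

    def __init__(self, real_credential: str):
        # The real credential lives only here, never in the sandbox.
        self._real_credential = real_credential
        self._sessions = []

    def register(self, session: Session) -> None:
        self._sessions.append(session)

    def _lookup(self, token: str):
        # Constant-time comparison avoids leaking token contents via timing.
        for session in self._sessions:
            if hmac.compare_digest(session.scoped_token, token):
                return session
        return None

    def authorize_push(self, token: str, repo: str, ref: str) -> dict:
        session = self._lookup(token)
        if session is None:
            raise PermissionError("unknown session token")
        if repo != session.repo:
            raise PermissionError("repository not allowed for this session")
        if ref != f"refs/heads/{session.branch}":
            raise PermissionError("branch not allowed for this session")
        # Only after verification is the real credential attached upstream.
        return {"Authorization": f"Bearer {self._real_credential}"}


proxy = GitProxy(real_credential="real-secret")
proxy.register(Session("sandbox-token", "acme/widgets", "fix-login"))

headers = proxy.authorize_push("sandbox-token", "acme/widgets",
                               "refs/heads/fix-login")
print(sorted(headers))
```

The design point is that a leaked sandbox token is only useful against the proxy, and only for the one repository and branch the session was scoped to.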