AI News Feed

AI Image Generators Mimic Phone-Photo Imperfections

15 Dec 2025: AI image models now mimic phone-camera imperfections to boost realism, which makes fakes harder to spot and is prompting a push for cryptographic credentials (C2PA, Pixel signing) and platform verification.


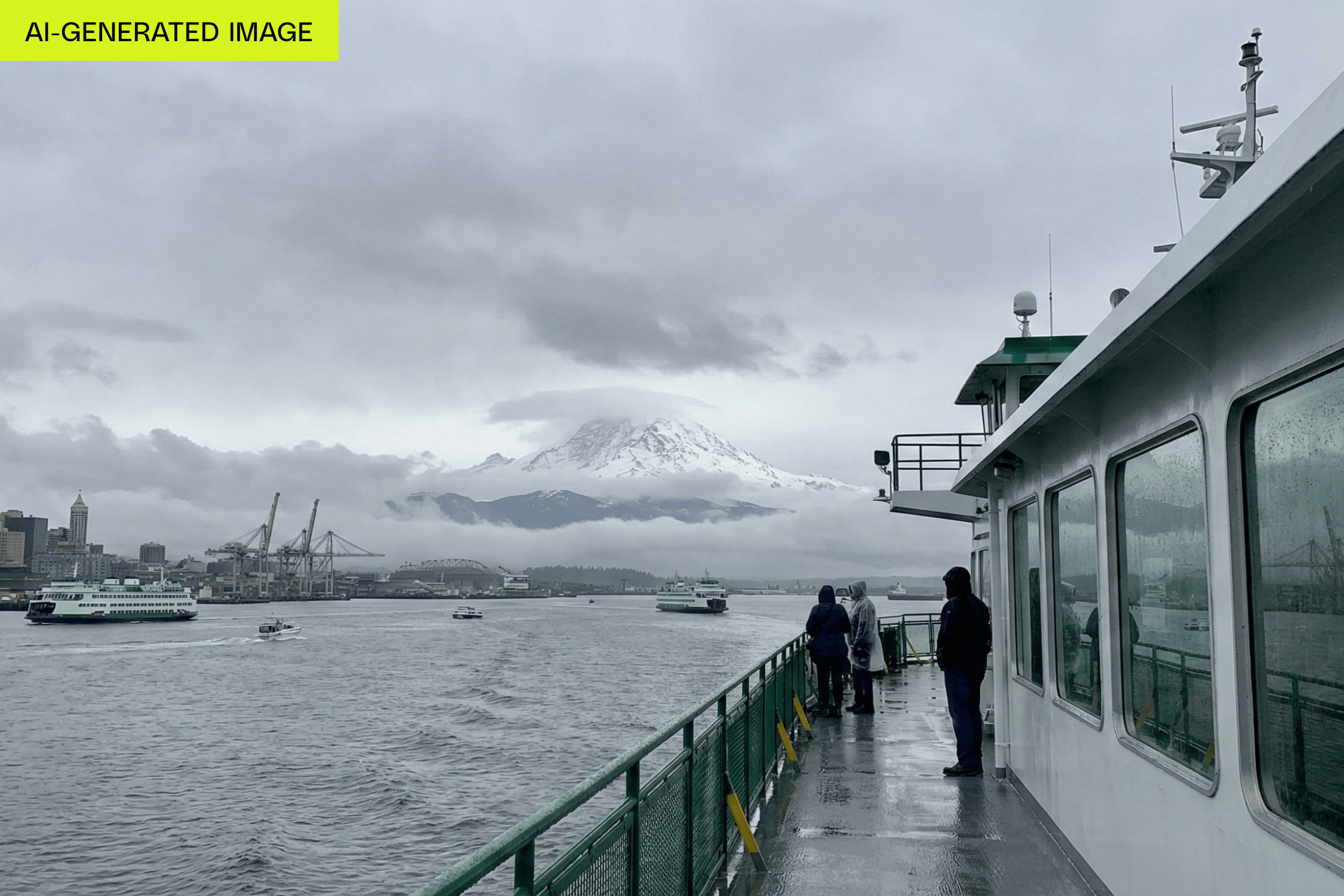

AI image generators are shedding their obvious “AI” look by deliberately imitating the messiness of real photography. Allison Johnson explains that newer models, notably Google’s Nano Banana and Nano Banana Pro, now produce outputs that mimic phone-camera processing artifacts (contrast, sharpening, exposure and multiframe processing), which makes fakes more believable. The article traces the arc from early DALL·E and Midjourney outputs, with blatant errors like extra fingers, to today’s models, which preserve individual likenesses better and trade polished stylization for real-world imperfections.

This trend isn’t limited to Google: Adobe’s Firefly offers a “Visual Intensity” control to dial down the glowy AI aesthetic, and Meta has “Stylization” settings. Video tools (OpenAI’s Sora 2, Google’s Veo 3) can convincingly mimic low-res CCTV or other familiar formats. That realism makes verification harder, so Johnson highlights C2PA’s Content Credentials and the Google Pixel 10’s cryptographic image signing as one response. The Pixel labels both AI and non-AI images, which helps avoid the “implied truth effect”, where the absence of an AI label is read as proof that an image is authentic. As Ben Sandofsky of Halide puts it, by copying phone-photo processing, “Google might have sidestepped around the uncanny valley.” The piece argues that credentialing, and platforms adopting it, will be needed to keep up as generated imagery becomes harder to spot.

Source

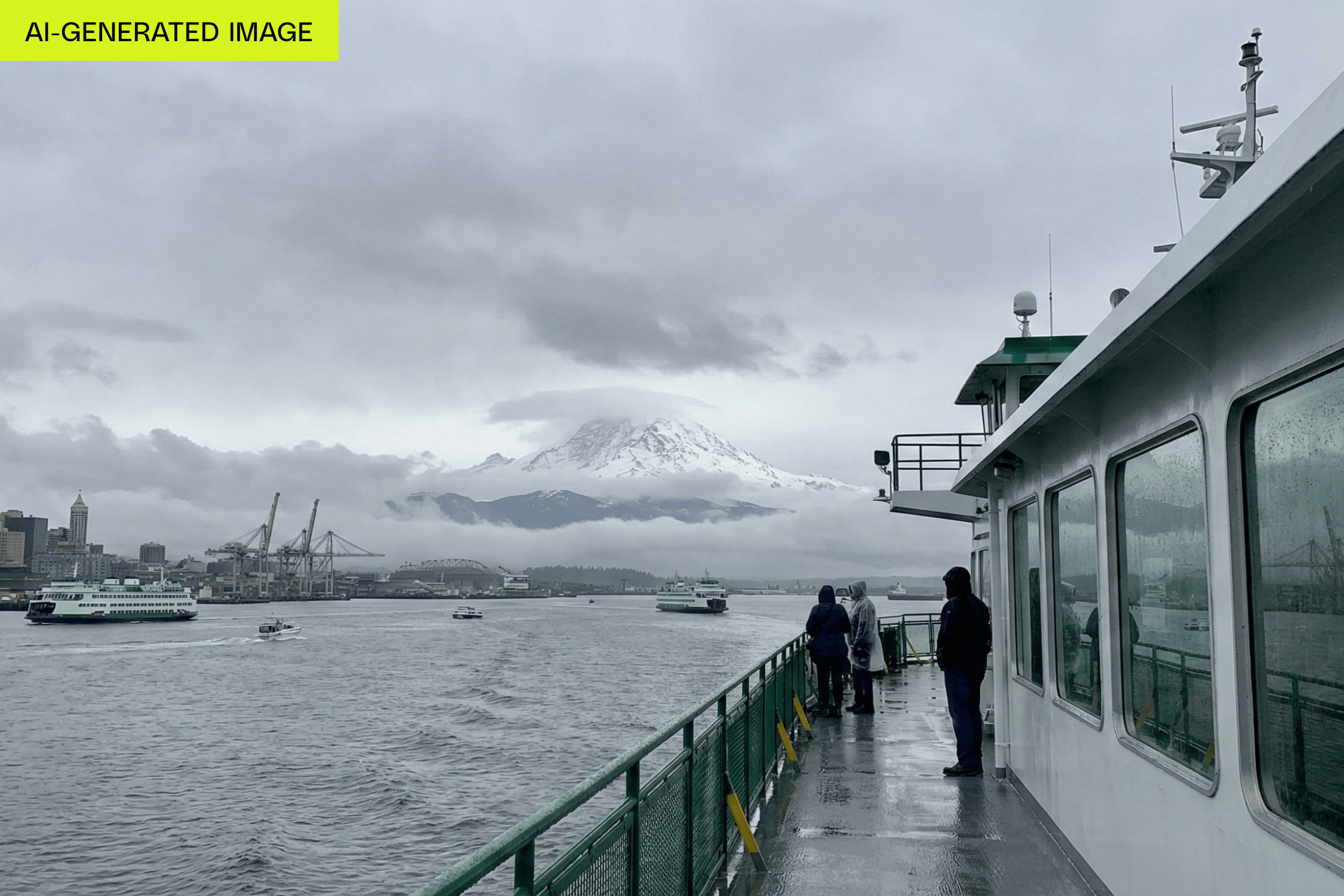
