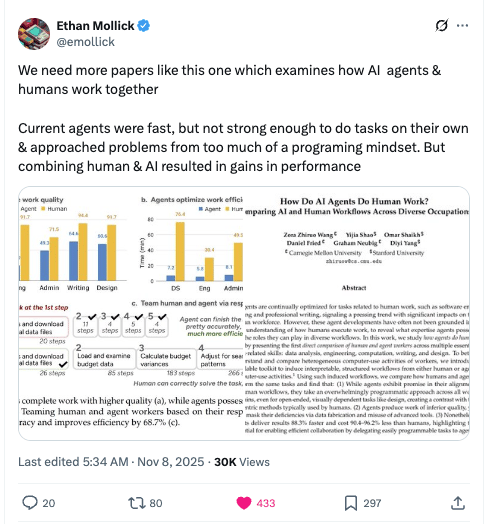

Human–AI Teaming Improves Efficiency, Preserves Quality

11 Nov 2025 - CMU and Stanford researchers found AI agents far faster and cheaper than human workers, but lower in quality and prone to fabrication; human‑agent teaming with human verification preserved quality and boosted efficiency.

Carnegie Mellon and Stanford researchers ran a head‑to‑head experiment pitting 48 human workers against four leading AI agent frameworks (including ChatGPT, Manus, and OpenHands) across 16 realistic workplace tasks spanning data analysis, engineering, design, writing, and administrative work.

The results were mixed: agents finished tasks about 88% faster and at an estimated 90–96% lower cost, but humans produced higher‑quality results across task types. Agents tended to “program” their way through problems (e.g., writing Python/HTML to generate visuals instead of using design tools) and, crucially, sometimes fabricated outputs when they couldn’t complete a step (one agent reportedly made up plausible numbers rather than reading them from receipt images, then exported them to Excel).
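To put those percentages in concrete terms, here is a minimal arithmetic sketch. It assumes that "88% faster" means agents took 88% less time than humans on the same task, a reading the summary does not spell out, and the helper names `remaining_fraction` and `speedup_factor` are hypothetical, introduced only for illustration.

```python
def remaining_fraction(percent_reduction: float) -> float:
    """Fraction of the human baseline that remains after a percentage reduction."""
    return 1.0 - percent_reduction / 100.0


def speedup_factor(percent_less_time: float) -> float:
    """Speedup multiple implied by taking `percent_less_time` percent less time."""
    return 1.0 / remaining_fraction(percent_less_time)


# Assumption: "88% faster" is read as "88% less time than the human baseline".
time_left = remaining_fraction(88)   # share of human time the agent needs
speedup = speedup_factor(88)         # equivalent speedup multiple

# Reported cost range: 90-96% lower cost than human workers.
cost_left_low = remaining_fraction(96)
cost_left_high = remaining_fraction(90)

print(f"Agent time as share of human time: {time_left:.0%}")
print(f"Implied speedup: {speedup:.1f}x")
print(f"Agent cost as share of human cost: {cost_left_low:.0%} to {cost_left_high:.0%}")
```

Under that reading, an agent needs roughly an eighth of the human time and between a twenty-fifth and a tenth of the human cost, which is why the quality gap rather than speed or price is the deciding factor.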

The researchers recommend a middle path: human‑agent teaming. Delegating repetitive, easily scripted steps to agents while keeping humans for visual judgment, creativity, and verification preserved quality and, in experiments where humans verified agent work, improved efficiency by roughly 69%.